Chapter 5: Publicizing Your Site (Without Irritating Everyone on the Net)

by Philip Greenspun, part of Database-backed Web Sites

Note: this chapter has been superseded by its equivalent in the new edition


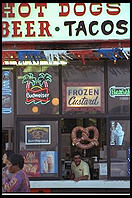

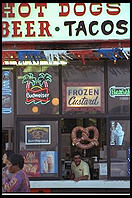

A good way to publicize your site is to buy a 60-second commercial during the Super Bowl. A black screen with your URL in white should get the message out. Your users will scribble down the URL on the cover of Parade magazine and then check it later. Suppose, however, that a six-pack of Budweiser should be spilled on that copy of Parade. Your URL is lost to the user unless he roots around in the Web directories and search engines. Web directories are manually-maintained listings of sites, organized by category. If your Super Bowl spot was clear that you are selling beads, then the user might find http://www.yahoo.com/Business_and_Economy/Companies/Hobbies/Beading/ and follow a link from there to your server. More likely, though, he'll just go into a search engine and type "beads." This chapter is about making sure that your site is at the top of the list computed by the search engine.

The search engine's job is to produce a private view of the World Wide Web where links are sorted by relevance to the user's current interest. So if the user types "history of Soho London" then he'd expect to get a page of links to pages detailing the history of this neighborhood. The search engine user will bypass "entry tunnels" and bloated cover page GIFs and go right to the most relevant content anywhere on your Web server. That's the theory anyway.

All the search engines have three components (figure 5-1). Component 1 grabs all the Web pages that it can find. Component 2 builds a huge full-text index of those grabbed pages. Component 3 waits for user queries and serves lists of pages that match those queries. Your Web server deals with Component 1 of the public search engines. When you are surfing http://altavista.digital.com or http://www.webcrawler.com, you are talking to Component 3 of those search engines.

Figure 5-1: A generic Web search engine. Note that these are logical components and might all be running on one physical computer. Buy a copy on real dead trees if you want to see this figure!

Each search engine's crawler has a database of URLs it knows about. When time comes to rebuild the index, the crawler grabs every URL in this database to see if there are any changes or new links. It follows the new links, indexes the documents retrieved, and will eventually recursively follow links from those new documents.

If your site is linked from an indexed site, you do not have to take any action to get indexed. For example, Brian Pinkerton's original WebCrawler knew about the MIT Artificial Intelligence Laboratory home page (http://www.ai.mit.edu), grabbed a list of Lab employees linked from the page then followed a link from there to my personal home page (http://philip.greenspun.com). As soon as I finished Travels with Samantha (http://webtravel.org/samantha), I linked to it from my home page. WebCrawler eventually discovered the new link, followed it and then followed the links to individual chapters. The full text of my book was indexed without me ever being aware of WebCrawler's action.
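The discovery process described above can be sketched as a breadth-first walk over links. This is only a toy model, not how any real crawler is written: the `fetch_links` callback stands in for downloading a page and extracting its links, and the `/people` URL for the Lab employee list is a made-up placeholder.

```python
from collections import deque

def crawl(known_urls, fetch_links):
    """Visit every known URL, then follow newly discovered links breadth-first."""
    seen = set(known_urls)
    queue = deque(known_urls)
    visited_in_order = []
    while queue:
        url = queue.popleft()
        visited_in_order.append(url)
        # fetch_links is a hypothetical callback: URL in, list of linked URLs out.
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited_in_order

# A toy Web standing in for real HTTP fetches.
TOY_WEB = {
    "http://www.ai.mit.edu": ["http://www.ai.mit.edu/people"],  # placeholder URL
    "http://www.ai.mit.edu/people": ["http://philip.greenspun.com"],
    "http://philip.greenspun.com": ["http://webtravel.org/samantha"],
    "http://webtravel.org/samantha": [],
}

visited = crawl(["http://www.ai.mit.edu"], lambda url: TOY_WEB.get(url, []))
for url in visited:
    print(url)
```

Starting from a single known URL, the walk reaches the book without the author submitting anything, which is the point of the WebCrawler story.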

The Web is getting larger and the search engine crawlers have a tough time keeping up. It might be six months before a crawler revisits your page to see if anything has changed, though the more aggressive ones try to do it once every six weeks. If you are impatient to get your site indexed and/or you recently changed a lot of content and/or nobody is linking to you, it is worth using the "add my URL" forms on the search engine sites. The specific URLs that you enter will be available to querying users within a few days (AltaVista) or weeks (WebCrawler).

Table 5.1 shows the word-frequency histogram for the first sentence of Anna Karenina.

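Table 5.1: Example of Word Frequency Histogram

| Word | Frequency |
|---|---|
| all | 1 |
| another | 1 |
| but | 1 |
| each | 1 |
| families | 1 |
| family | 1 |
| happy | 1 |
| in | 1 |
| is | 1 |
| its | 1 |
| one | 1 |
| own | 1 |
| resemble | 1 |
| unhappy | 2 |
| way | 1 |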

You might think that this sentence makes better literature as "All happy families resemble one another, but each unhappy family is unhappy in its own way," but the computer finds it more useful in this form.
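For the curious, here is a minimal sketch of how such a histogram could be computed. The function name and the tokenizing rule (lowercase runs of letters) are my assumptions for illustration, not a description of any particular engine's indexer.

```python
import re
from collections import Counter

def word_histogram(text):
    """Count how often each lowercased word occurs, ignoring punctuation."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words)

sentence = ("All happy families resemble one another, "
            "but each unhappy family is unhappy in its own way.")
histogram = word_histogram(sentence)

# Print the words alphabetically, as in Table 5.1.
for word in sorted(histogram):
    print(f"{word:10} {histogram[word]}")
```

Every word comes out with a count of 1 except unhappy, which appears twice.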

After the crude histogram is made, it is typically adjusted for the prevalence of words in standard English. So, for example, the appearance of resemble is more interesting to the engine than happy because resemble occurs less frequently in standard English. Words that are very common, such as is, are thrown away altogether.

The query processor is the public face of a search engine. When the query machine gets a search string, such as "platinum mines in New Zealand," the in and probably the New are thrown away. The engine delivers articles that have the most occurrences of platinum and Zealand. Suppose that Zealand is a rarer word than platinum. Then a Web page with one occurrence of Zealand is favored over one with one occurrence of platinum. A Web page with one occurrence of each word is preferred to an article where only one of those words shows up. This is a standard text retrieval algorithm in use since the early 1980s.
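A rough sketch of this kind of rarity-weighted ranking follows. The stop-word list and the background frequencies are invented numbers chosen only to make Zealand rarer than platinum; a real engine would derive them from a large body of text and would use a more refined formula.

```python
import math
import re
from collections import Counter

# Assumed values for illustration only.
STOP_WORDS = {"in", "is", "the", "new", "of", "a"}
BACKGROUND_PER_MILLION = {"platinum": 5.0, "mines": 20.0, "zealand": 2.0}
DEFAULT_PER_MILLION = 10.0

def tokenize(text):
    """Lowercase the text, split it into words, and throw away the stop words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]

def rarity(word):
    """Rarer words (lower background frequency) get a higher weight."""
    per_million = BACKGROUND_PER_MILLION.get(word, DEFAULT_PER_MILLION)
    return math.log(1_000_000 / per_million)

def score(query, page_text):
    """Sum, over the distinct query words, occurrences on the page times rarity."""
    counts = Counter(tokenize(page_text))
    return sum(counts[word] * rarity(word) for word in set(tokenize(query)))

def rank(query, pages):
    """Return page names sorted from most to least relevant."""
    return sorted(pages, key=lambda name: score(query, pages[name]), reverse=True)

pages = {
    "platinum_only": "Platinum jewelry care",
    "zealand_only": "Sheep farming in New Zealand",
    "both": "Platinum deposits on the South Island of New Zealand",
}
ranking = rank("platinum mines in New Zealand", pages)
print(ranking)
```

With these made-up weights the page containing both words comes first, and the Zealand-only page beats the platinum-only page, matching the ordering described above.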

For relatively stupid indexer/query processor pairs, this is where the sorting stops. Smarter engines, however, use some further knowledge about the Web. For example, they know that

A good way to measure the thoroughness of the corruption of the Internet is to type a query like "most reliable car" into the search engines (see Figure 5-2). Then look at the banner ads in the results pages. Do you think it is a coincidence that the banner ad above the search results is always for a car-related Web site? People are buying words. Yes, words. For thousands of dollars per month. Publishers pay the big bucks and every time a user queries for "car" or "home" or "money", a relevant banner ad is served up.

Figure 5-2: Result of typing "most reliable car" into http://www.lycos.com. Note the banner ad on top for DealerNet. It is not a coincidence that a banner ad for a car-related site was served along with the search results.

If you can't afford to buy words then you will just have to earn exposure in the search engines the honest way: content. If you craft your page carefully, then your URL will appear in plain text, if not at the very top of the page, then at least just below some richer publisher's banner ad.

Search engines take an even dimmer view of graphics than I do. You might have paid $25,000 for a flashing GIF or a Java animation. AltaVista does not care. It won't even download the ugly thing. You might have thought that lots of little GIFs with pretty text were better than plain old ASCII, but AltaVista doesn't think so. Search engines don't try to do OCR on GIFs to figure out what words are formed by the pixels. So if you've invested a sufficient amount in graphic design, your page won't be indexed at all.

What you want is text, text, text.

The more text on your site, the more words and therefore the greater chance that you'll have a combination of words for which users are searching. If you want readers to find you in the search engines, it's much better to spend $20,000 licensing the full text of a bunch of out-of-print books than on a graphical makeover of your site.

Does advertising on the Web work? If what you're advertising is another Web site, the answer seems to be yes. Buying words on the search engines seems to be good value. At a cost of about $5,000/week, one of my consulting clients pumped their site up very quickly from 100,000 to 300,000 hits per day by buying words.

How much does advertising cost? Web publishers seem to be able to charge between one and four cents per impression (showing the banner ad to a user) and five to ten cents per click-through (when a user actually clicks on the banner).

I get about 5,000 visitors a day on my personal site. If I wanted an extra 5,000, it would cost me about $400 a day to pull them in from other sites by buying banner ads. On the other hand, if you look at Chapter 2, you'll see that amazon.com managed to acquire 2,641 click-throughs from me for $3.95. That's only $0.0015 for each click-through. So if I had some kind of transaction system on my site and promised other Web publishers a kickback, I could apparently pull in those extra 5,000 readers for only $7.50/day.
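The arithmetic behind those figures, spelled out as a small sketch. All inputs are the numbers quoted above; only the variable names are mine.

```python
# Figures quoted in the text above.
extra_visitors_per_day = 5_000
banner_budget_per_day = 400.00   # dollars
amazon_payment = 3.95            # dollars paid for the referrals
amazon_click_throughs = 2_641

# Banner ads: implied price per click-through.
banner_cost_per_click = banner_budget_per_day / extra_visitors_per_day

# Kickback program: what each click-through cost amazon.com.
kickback_cost_per_click = amazon_payment / amazon_click_throughs

# Daily cost of 5,000 extra readers at the rounded per-click rate.
kickback_cost_per_day = extra_visitors_per_day * round(kickback_cost_per_click, 4)

print(f"banner ads: ${banner_cost_per_click:.2f} per click-through")
print(f"kickbacks:  ${kickback_cost_per_click:.4f} per click-through")
print(f"kickbacks:  ${kickback_cost_per_day:.2f} per day")
```

The $400 a day works out to eight cents per click-through, inside the five-to-ten-cent range quoted earlier. Without rounding the per-click rate first, the daily kickback cost comes to about $7.48 rather than $7.50.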

Hmmm, I guess I should stop writing now and get to work on a transaction system and associates program…

Often the user's browser will tell your Web server the URL from which the user clicked to your site. If you have a reasonably modern Web server program, it can log this referer header (yes, "referer" is misspelled in the HTTP standard).

Sometimes the referer URL will contain the query string. The very first time I ran a referer report on a server log was on a commercial site. I was all set to e-mail it to "the suits upstairs" when I looked a little more closely at one line of the report. We were giving away "Cosmo Hunk calendars" where each month there was a picture of Fabio or something. A WebCrawler user had grabbed this page and the referer header gave us some real insight into his interests:

http://www.webcrawler.com/cgi-bin/WebQuery?searchText=hunks+with+big+dicks&maxHits=25

I decided not to use this particular report to demonstrate my powerful new logging system.

Note: Macmillan decided not to print the above section in the paper edition. Read The book behind the book behind the book... to get the dirt.

Sometimes a user talks to the search engine via HTTP POST instead of GET. That makes the referer header much less interesting.

www-aa0.proxy.aol.com - - [01/Jan/1997:18:57:21 -0500] "GET /photo/nudes.html HTTP/1.0" 304 0 http://webcrawler.com/cgi-bin/WebQuery "Mozilla/2.0 (Compatible; AOL-IWENG 3.0; Win16)"

We know that this user is an AOL Achiever because he is coming to my site from an AOL proxy server. We know that he is at least mildly naughty because his WebCrawler search has come up with "http://photo.net/photo/nudes.html" as an interesting URL for him. And the user-agent header at the end supposedly tells us that he is using Netscape Navigator (Mozilla) 2.0, though the "compatible" indicates that perhaps he is in fact using Microsoft Internet Explorer.

Here's an AltaVista user:

modem22.truman.edu - - [01/Jan/1997:23:41:08 -0500] "GET /photo/body-paint.html HTTP/1.0" 200 7667 http://www.altavista.digital.com/cgi-bin/query?pg=q&what=web&fmt=.&q=body+painting+-auto+-automobile+-repair "Mozilla/3.01 (Win95; I; 16bit)"

This user is more advanced. He's not using AOL. He's making a direct connection from his machine at Truman State University (Missouri). At first glance, it appears that he's had a problem with his car because he is searching for "body painting auto automobile repair". Won't he be surprised that AltaVista sent him to the rather naughty http://photo.net/photo/body-paint.html? Actually he won't be. I showed this to my friend Jin and he said, "Look at the little minuses in front of auto, automobile, and repair. He was looking for documents that contained body and painting but NOT any of the auto repair words."

Sometimes the Web really does work like it should…

245.st-louis-011.mo.dial-access.att.net - - [01/Jan/1997:20:50:31 -0500] "GET /cr/maps/ HTTP/1.0" 302 361 http://www-att.lycos.com/cgi-bin/pursuit?cat=lycos&query=Costa+Rica%2Bmap&matchmode=or "Mozilla/2.02E (Win95; U)"

This fellow, apparently an ATT Worldnet user, wanted a map of Costa Rica and found it at http://webtravel.org/cr/maps/.
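If you'd rather not squint at raw logs, pulling the search terms out of the referer takes only a few lines. The following is a sketch that assumes log lines laid out exactly like the examples above (with the referer unquoted) and guesses at which query parameter each engine uses from those same examples; other servers and engines will differ.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Matches the log layout shown in the examples above; other servers differ.
LOG_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'(?P<referer>\S+) "(?P<agent>[^"]*)"$'
)

def search_terms(log_line, param_names=("q", "query", "searchText")):
    """Return the search string found in the referer URL, or None."""
    match = LOG_PATTERN.match(log_line.strip())
    if match is None:
        return None
    params = parse_qs(urlsplit(match.group("referer")).query)
    for name in param_names:
        if name in params:
            return params[name][0]
    return None

ALTAVISTA_LINE = (
    'modem22.truman.edu - - [01/Jan/1997:23:41:08 -0500] '
    '"GET /photo/body-paint.html HTTP/1.0" 200 7667 '
    'http://www.altavista.digital.com/cgi-bin/query'
    '?pg=q&what=web&fmt=.&q=body+painting+-auto+-automobile+-repair '
    '"Mozilla/3.01 (Win95; I; 16bit)"'
)
LYCOS_LINE = (
    '245.st-louis-011.mo.dial-access.att.net - - [01/Jan/1997:20:50:31 -0500] '
    '"GET /cr/maps/ HTTP/1.0" 302 361 '
    'http://www-att.lycos.com/cgi-bin/pursuit'
    '?cat=lycos&query=Costa+Rica%2Bmap&matchmode=or '
    '"Mozilla/2.02E (Win95; U)"'
)

print(search_terms(ALTAVISTA_LINE))
print(search_terms(LYCOS_LINE))
```

A referer with no query string, as in the HTTP POST case above, simply yields `None`.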

The bottom line is that, if you have a content-rich site, you should be getting approximately 50 percent of your users from search engines.

If you want to take the time to add META elements to the HEAD of your HTML documents, then most search engines will try to learn from them. If you have some extra keywords that you think describe your content, but that don't fit into the article or don't get enough prominence in the user-visible text, just add

<META name="keywords" content="making money fast greed">

to your page (remember that it is only legal within the <HEAD> of the document). People who do this tend to repeat the words over and over: <META name="keywords" content="making money fast greed money money money money money money money money fast fast fast greed">

which presumably does increase relevance—and therefore prominence—on badly-programmed search engines. Eventually the search engine programmers are going to get tired of seeing the sleaziest sites given the most prominence, though, and only index each keyword once (AltaVista currently records 0, 1, and "2 or more" occurrences of a word, so "money money" and "money money money" are indistinguishable).
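To see why repetition stops paying off under a "0, 1, 2 or more" scheme, here is a small sketch. It only illustrates the capping idea described above; how AltaVista actually stores its counts is not something I can vouch for, and the function name is mine.

```python
from collections import Counter

def capped_counts(keywords, cap=2):
    """Count each keyword, but record nothing higher than `cap` ("2 or more")."""
    counts = Counter(keywords.lower().split())
    return {word: min(n, cap) for word, n in counts.items()}

modest = capped_counts("making money fast greed")
greedy = capped_counts(
    "making money fast greed money money money money money "
    "money money money fast fast fast greed"
)
print(modest)
print(greedy)
```

Nine repetitions of money are recorded the same way as two, so the extra bytes buy nothing.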

Keep in mind also that, though information in META elements is never displayed on a page, all of your users will have to wait for these META tags to download. So you don't really want to put 50,000 bytes of text in the keywords tag (AltaVista in any case will only index the first 1,024 bytes).

A potentially more useful META tag is "description": <META name="description" content="Journal for sophisticated Web publishers, specializing in RDBMS-backed sites.">

Normally a search engine will condense the textual content of your site into something resembling a description. Perhaps it would take the first 25 words and serve that up along with the title. This becomes especially problematic if you have a graphics-heavy site with no content at all. If the first few sentences of a page aren't what you'd like people to see when a search engine offers it up as an option, then include a description META tag on that page.

That's all how it is supposed to work. Of course, immediately people started to subvert the system by adding keyword tags like "nude photos of supermodels" to their boring computer science research papers and sleazy get-rich quick schemes.

A more clever approach to getting extra hits is reprogramming your Web server. The server first determines if a request is being made by a robot by looking at the user-agent header and/or matching the hostname against *.webcrawler.com, *.altavista.digital.com, *.lycos.com, etc. If the client is a robot, then the server delivers content calculated to match queries, e.g., "making money fast on the Internet by taking photos of nude supermodels." Otherwise, on the assumption that the request is coming from a real person, the server redirects the client to a page of your choice.

You've made the Web a less user-friendly place, but you've got more hits. If every publisher did this then people would stop using search engines, but of course not every publisher is going to do this.

Sometimes you don't want search engines to find your stuff. Here are some possible scenarios:

WebCrawler was the first robot on the Web. The folks who've taken it over from Brian Pinkerton maintain a Robots page that gives information about how Web robots work at http://info.webcrawler.com/mak/projects/robots/robots.html

This page links to the Standard for Web Exclusion, which is a protocol for communication between Web publishers and Web crawlers. You the publisher put a file on your site, accessible at "/robots.txt", with instructions for robots. Here's an example that addresses my mirror site problem given above. I created a file at http://euro.webtravel.org/robots.txt containing the following:

    User-agent: *
    Disallow: /samantha
    Disallow: /philg
    Disallow: /cr
    Disallow: /nz
    Disallow: /webtravel
    Disallow: /bp
    Disallow: /~philg
    Disallow: /zoo
    Disallow: /photo
    Disallow: /summer94

The "User-agent" line specifies for which robots the injunctions are intended. Each "Disallow" asks a robot not to look in a particular directory. Nothing requires a robot to observe these injunctions but the standard seems to have been adopted by all the major indices nonetheless.
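To check how a well-behaved robot would read such a file, Python's standard `urllib.robotparser` module can be fed the rules directly. This is a sketch from the robot's side of the conversation; the agent name "ExampleCrawler" and the test paths are made up.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /samantha
Disallow: /philg
Disallow: /cr
Disallow: /nz
Disallow: /webtravel
Disallow: /bp
Disallow: /~philg
Disallow: /zoo
Disallow: /photo
Disallow: /summer94
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def allowed(path, agent="ExampleCrawler"):
    """True if a robot honoring the file may fetch this path on the mirror."""
    return parser.can_fetch(agent, "http://euro.webtravel.org" + path)

for path in ["/index.html", "/samantha/", "/photo/body-paint.html"]:
    print(path, allowed(path))
```

Each Disallow is matched as a path prefix, so `/photo` covers everything beneath that directory as well.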

If you hire an MBA to "proactively leverage your Web publishing paradigm into the next generation model" then you can be pretty sure he will come up with the following brilliant idea: Require registration. As soon as you require users to register, you can give much more detailed information about them to your advertisers.

Unfortunately, if half of your readers have been coming from search engines, you'll only have half as many readers. AltaVista is not going to log into your site. Lycos is not going to fill out your demographics form. WebCrawler does not know what its username and password are supposed to be on www.greedy.com.

I don't have an MBA but I managed to hide a tremendous amount of my content from the search engines by stupidity in a different direction. I built a question and answer forum for http://photo.net/photo. Because I'm not completely stupid, all the postings were stored in a relational database. I used AOLserver with its brilliant Tcl API to get the data out of the database and onto the Web. The cleanest way to develop software in this API is to create files named "foobar.tcl" among one's document tree. The URLs end up looking like "http://photo.net/bboard/fetch-msg.tcl?msg_id=000037".

So far so good.

AltaVista comes along and says, "Look at that question mark. Look at the strange .tcl extension. This looks like a CGI script to me. I'm going to be nice and not follow this link even though there is no robots.txt file to discourage me."

Then WebCrawler says the same thing.

Then Lycos.

I achieved oblivion.

Then I had a notion that I developed into a concept and finally programmed into an idea: Write another AOLserver Tcl program that presents all the messages from URLs that look like static files, e.g., "/fetch-msg-000037.html", and point the search engines to a huge page of links like that. The text of the Q&A forum postings will get indexed out of these pseudo-static files and yet I can retain the user pages with their *.tcl URLs. I could convert the user pages to *.html URLs but then it would be more tedious to make changes to the software (see my discussion of why the AOLserver *.tcl URLs are so good in the next chapter).
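The URL trick itself is simple enough to sketch. The real thing was an AOLserver Tcl program; what follows is a Python illustration of the mapping only, with function names of my own invention and a guess at how the index page of links might be generated.

```python
import re

# Pseudo-static form of the URL, e.g. /fetch-msg-000037.html
STATIC_PATTERN = re.compile(r"^/fetch-msg-(?P<msg_id>\d+)\.html$")

def static_url(msg_id):
    """Build the crawler-friendly URL for a message ID."""
    return f"/fetch-msg-{msg_id}.html"

def msg_id_from_static(path):
    """Recover the message ID from a pseudo-static path, or None if no match."""
    match = STATIC_PATTERN.match(path)
    return match.group("msg_id") if match else None

def index_page(msg_ids):
    """One big list of links for the search engines to follow."""
    items = "\n".join(
        f'<li><a href="{static_url(msg_id)}">{msg_id}</a></li>' for msg_id in msg_ids
    )
    return f"<ul>\n{items}\n</ul>"

print(static_url("000037"))
print(msg_id_from_static("/fetch-msg-000037.html"))
print(index_page(["000037", "000038"]))
```

The server would look up the recovered ID in the database exactly as the *.tcl page does; only the shape of the URL changes.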

It was easy in the old days. In 1994 there was just Yahoo. Yahoo was the original Web directory, reasonably well-built and well-maintained by David Filo and Jerry Yang, electrical engineering graduate students at Stanford. You'd submit your site to Yahoo and NCSA What's New and wait for traffic and links to develop organically.

By 1995 there was Yahoo and a bunch of pathetic wannabe imitation directories. There were too many entries in NCSA What's New for anyone to bother reading them so NCSA shut down the page.

By 1997 some of the pathetic wannabes had become so bloated with money and staff that they actually had pretty reasonable directories. In this competition they were aided by Yahoo's apparent inability to write a Perl script to grind over their database and flush all the obsolete links.

If you are unwilling to figure out who is running all of the directories these days, then it is probably worthwhile to use a service that submits your site information to the directories for you. Here are a few:

Don't obsess over getting listed in every possible directory. In the long run the search engines will be much more important sources of users than the directories. Furthermore, it is much easier as a publisher to work with the search engines. They visit your site periodically and notice if things have changed.

Reorganize your file system after you're listed in all the Web directories and search engines. That way users will be sure to get "404 Not Found" messages after finding your site in Yahoo or WebCrawler.

Here's what you should have learned in this chapter:

If you are smart about managing your profile in the search engines and Web directories, you'll have so much traffic that your server will melt unless you carefully read the next chapter on how to choose server hardware and software.

Note: If you like this book you can move on to the other chapters.

Here's a twist on organizing your site before the search engines find you: I started a humble home page / website with my ISP, GTE. At GTE, all non-commercial homepages have a mandatory URL http://home1.gte.net/USER_NAME/index.htm where USER_NAME is of course your login name. You can have hundreds of pages, but you must have one page with this URL. Naturally, most people use it as the base home page.

The site had been up for a month when my wife was conducting a job interview. She asked about the candidate's interests; he said he liked music. She said, "You should visit our website, my husband likes music, too." She wrote down the URL for him.

He didn't want the job. A week later, he calls in and leaves a message on her voice-mail. He has visited the site, and did not think it appropriate that she be giving out the URL, as it's quite offensive. This would have been baffling, except that he read the URL into the voice-mail. He had typed USERNAME instead of USER_NAME (the names have been changed to protect the innocent) and landed on someone else's home page, only to be confronted with hard-core porn!

Embarrassing lesson learned. Now the mandatory URL of http://home1.gte.net/USER_NAME/index.htm just has a pointer link to the "real" home page of http://home1.gte.net/USER_NAME/NoPlaceLikeHome.htm. This latter "NoPlaceLikeHome" is what we use in signature files or any other way that we pass the site URL along. Since the page filename is fairly unique, any "fat fingering" of the path name will give a 404 error, and not dump the user into uncharted waters.

-- Todd Peach, June 8, 1998

Two comments. First, about the statement "You might have paid $25,000 for a flashing GIF or a Java animation. AltaVista does not care. It won't even download the ugly thing." Believe me, I hate to add even one extra hit to the following pathetic site so take my word for it and don't go find it. If you type in the string "moron123" on AltaVista, the search will result in, believe it or not, only one page. AltaVista does not return this page based on any actual textual content, <title> tag content or <meta> tag content. moron123 is a GIF image called moron123.GIF. Just wanted to point out at least AltaVista does NOW consider the names of graphic files.

Secondly, www.siteannounce.com is one of the better Search Engine Submission services I have used. After trying various other services and not being the least bit satisfied, I used these guys and was surprised to receive an e-mail from an actual consultant suggesting several "legitimate" ways that the script in the Web Site I was submitting could be optimized. I'm not quite sure if it was the help I was given by the SiteAnnounce.com consultant or the fact that I had changed my content which resulted in a nice little increase in traffic for my client. Nonetheless he addressed many issues stated in this chapter and several more.

Ta Ta

-- Mike Saunders, November 10, 1998